Jie Fu (付杰) is a happy and funny research scientist at IQuest Research, chasing his human-friendly big AI¹ dream.

He was a postdoctoral fellow (funded by Microsoft Research Montreal) supervised by Yoshua Bengio at the University of Montreal, Quebec AI Institute (Mila). He was also an IVADO postdoctoral fellow supervised by Chris Pal at Polytechnique Montreal, Quebec AI Institute (Mila). He obtained his PhD from the National University of Singapore under the supervision of Tat-Seng Chua. He received outstanding paper awards at NAACL 2024 and ICLR 2021, and was a finalist for the 2022 ACM Gordon Bell Prize (COVID-19 Track).

His engineering and research portfolio centers on scaling what works and inventing what’s missing, to preserve humanity and help it flourish. Key techniques include LLMs (NAACL ’24), deep reinforcement learning (ICML ’24), autoformalization, neural-symbolic machines, meta-learning (ICLR ’24), reasoning (arXiv ’25), and safe AI (arXiv alignment survey, ’23).

¹ Also means 💜, 爱, 愛, あい

I’m (still…) continual-training myself (slowly…)

arXiv

Oct 1, 2025

arXiv

Jul 23, 2025

ACL 2025, Oral (243 out of 8360 submissions)

Feb 27, 2025

NAACL 2024, Outstanding Paper Award (6 out of 2434 submissions)

Jun 29, 2024

NeurIPS 2024 Spotlight

May 30, 2024

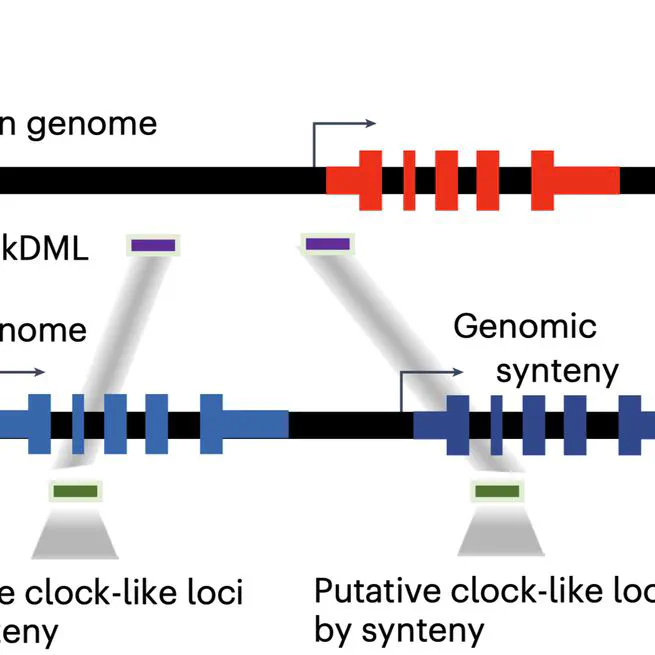

Nature Biotechnology 2024

May 20, 2024

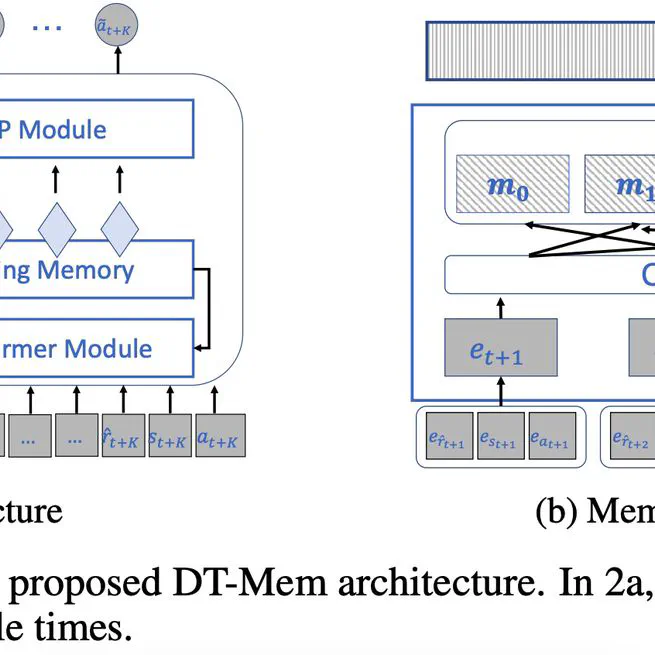

ICML 2024

May 19, 2024

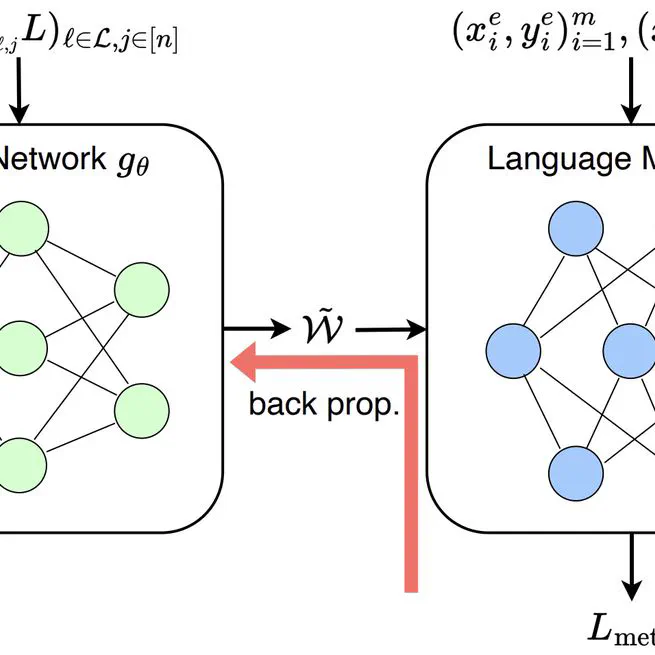

ICLR 2024

Jan 16, 2024

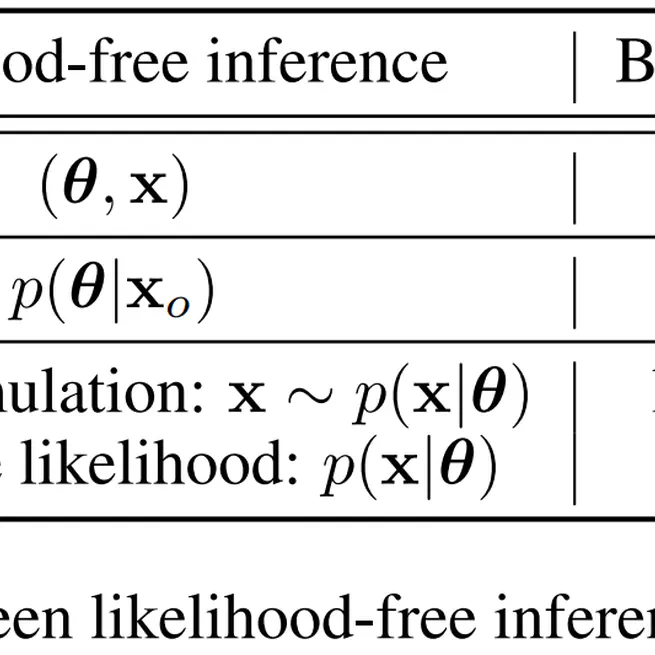

ICLR 2022 Spotlight

Jan 20, 2022

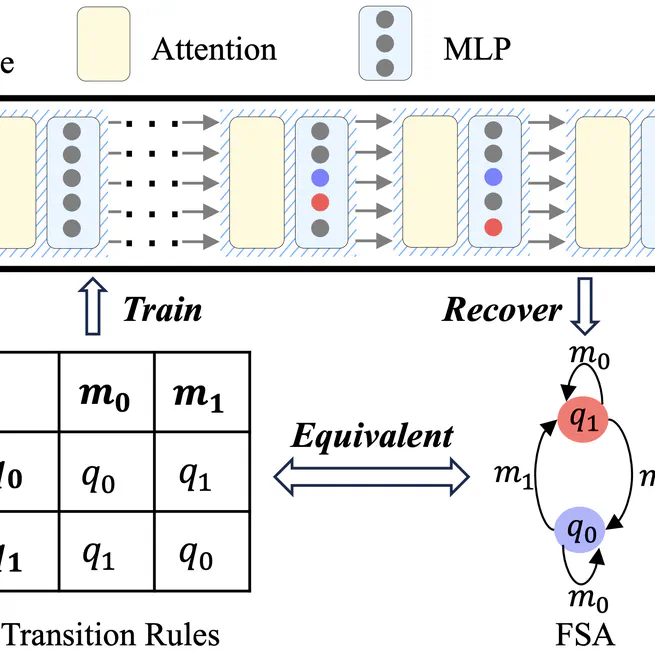

ICLR 2021 Outstanding Paper Award (8 out of 2997 submissions)

Apr 2, 2021